エンジニア向け

Utilize ChatGPT as Your Assistant for Python Programming Tasks.

Raihan Kabir

In the rapidly evolving world of programming, having a reliable and intelligent assistant can be a game-changer. Imagine having a companion that can effortlessly guide you through the intricate labyrinth of Python coding, helping you overcome challenges and unlocking new possibilities. Welcome to the realm of ChatGPT – your ultimate Python programming assistant. With its expansive knowledge and powerful capabilities, ChatGPT is poised to revolutionize the way you approach Python programming tasks. In this blog, we’ll explore how ChatGPT can be your indispensable ally, streamlining your coding journey and enhancing your programming prowess. Whether you’re a seasoned developer or a novice coder, join us on this exciting journey of harnessing the potential of ChatGPT for all your Python programming needs.

From this blog you will learn about:

- Preparing a simple data processing Python script

- Refactoring your Python code

- Extending your code

- Converting your code to an App

- Explain the code in detail

Preparing a simple data-processing Python script

In the world of programming, efficiency is key. With ChatGPT, you can input your code queries using natural language and receive accurate outputs instantly. This blog explores how ChatGPT serves as your interactive programming companion, offering coding convenience and streamlined output retrieval. Discover how this technology transforms coding into a seamless and effortless experience.

Initially, the goal is to experiment with loading a dataset that you can use for Deep Learning. This involved verifying that all dataset values were in numeric form or converting them if needed. Additionally, I checked for any missing values and proceeded to divide the dataset into separate train and test sets.

ChatGPT prompt:

Create a Python script that accomplishes the following tasks using ChatGPT prompt:

- Reads a CSV dataset from the disk and stores it in a Pandas DataFrame.

- Validates that the dataset exclusively contains numeric values; transforms any string values to numeric while keeping a record of this conversion in a dictionary for future reference.

- Detects missing values within the DataFrame and replaces missing values in any entry with a single missing value; entirely removes any entry with more than one missing value.

- Divides the dataset into separate test and training sets.

Certainly! Below is a Python script that accomplishes the tasks you’ve described using the Pandas library:

import pandas as pd

import numpy as np

# Read CSV dataset into a Pandas DataFrame

csv_file_path = 'path/to/your/dataset.csv'

data_df = pd.read_csv(csv_file_path)

# Validate and convert string values to numeric

conversion_dict = {}

for column in data_df.columns:

if data_df[column].dtype == 'object':

try:

data_df[column] = pd.to_numeric(data_df[column], errors='raise')

except ValueError:

conversion_dict[column] = data_df[column].tolist()

data_df[column] = np.nan

# Preprocess missing values

data_df = data_df.fillna(np.nan)

data_df.dropna(thresh=data_df.shape[1] - 1, inplace=True)

# Split dataset into training and test sets

test_ratio = 0.2

mask = np.random.rand(len(data_df)) < 1 - test_ratio

train_set = data_df[mask]

test_set = data_df[~mask]

# Print converted values for reference

print("Converted Values:")

for column, converted_values in conversion_dict.items():

print(f"{column}: {converted_values}")

# Print resulting DataFrames

print("\nTraining Set:")

print(train_set)

print("\nTest Set:")

print(test_set)

Remember to replace ‘path/to/your/dataset.csv‘ with the actual path to your CSV dataset. This version of the script accomplishes the same tasks but without using separate functions.

Refactoring your Python code

let’s enhance the code by embracing the fundamental principles of effective coding, which include employing functions to logically structure your code, enhance reusability, and prevent unnecessary repetition. This approach not only promotes clean and organized code but also contributes to more efficient maintenance and development.

ChatGPT prompt:

Refactor the preceding script by incorporating a sequence of meaningful functions.

Certainly, let’s restructure the provided script using a series of functions that are logically organized and meaningful:

import pandas as pd

import numpy as np

# Function to read CSV dataset into a Pandas DataFrame

def read_csv_into_dataframe(file_path):

return pd.read_csv(file_path)

# Function to validate and convert string values to numeric

def validate_and_convert_numeric(df):

conversion_dict = {}

for column in df.columns:

if df[column].dtype == 'object':

try:

df[column] = pd.to_numeric(df[column], errors='raise')

except ValueError:

conversion_dict[column] = df[column].tolist()

df[column] = np.nan

return df, conversion_dict

# Function to preprocess missing values

def preprocess_missing_values(df):

df = df.fillna(np.nan)

df.dropna(thresh=df.shape[1] - 1, inplace=True)

return df

# Function to split dataset into training and test sets

def split_dataset(df, test_ratio=0.2):

mask = np.random.rand(len(df)) < 1 - test_ratio

train_df = df[mask]

test_df = df[~mask]

return train_df, test_df

# Main execution

def main():

# Path to your CSV dataset

csv_file_path = 'path/to/your/dataset.csv'

# Read CSV into DataFrame

data_df = read_csv_into_dataframe(csv_file_path)

# Validate and convert string values to numeric

data_df, conversion_dict = validate_and_convert_numeric(data_df)

# Preprocess missing values

data_df = preprocess_missing_values(data_df)

# Split dataset into training and test sets

train_set, test_set = split_dataset(data_df, test_ratio=0.2)

# Print converted values for reference

print("Converted Values:")

for column, converted_values in conversion_dict.items():

print(f"{column}: {converted_values}")

# Print resulting DataFrames

print("\nTraining Set:")

print(train_set)

print("\nTest Set:")

print(test_set)

# Execute the main function

if __name__ == "__main__":

main()

Extending your code

Alright, the script has gained an improvement with the integration of functions for better organization. Moving forward, let’s enhance the script slightly to allow users to define specific parameters during its execution.

ChatGPT prompt:

Revise the program provided above to request the following information through command line arguments, and subsequently incorporate these details into the program:

- The path and name of the CSV dataset file.

- The desired size for the train/test split.

- The threshold indicates the maximum allowable number of missing values per record; records surpassing this threshold will be dropped entirely instead of replacing the missing values.

Certainly, here’s the modified script that incorporates command line arguments for the dataset file path, train/test split size, and missing value threshold:

import argparse

import pandas as pd

import numpy as np

# Function to read CSV dataset into a Pandas DataFrame

def read_csv_into_dataframe(file_path):

return pd.read_csv(file_path)

# Function to validate and convert string values to numeric

def validate_and_convert_numeric(df):

conversion_dict = {}

for column in df.columns:

if df[column].dtype == 'object':

try:

df[column] = pd.to_numeric(df[column], errors='raise')

except ValueError:

conversion_dict[column] = df[column].tolist()

df[column] = np.nan

return df, conversion_dict

# Function to preprocess missing values

def preprocess_missing_values(df, missing_threshold):

df = df.fillna(np.nan)

df.dropna(thresh=df.shape[1] - missing_threshold, inplace=True)

return df

# Function to split dataset into training and test sets

def split_dataset(df, test_ratio):

mask = np.random.rand(len(df)) < 1 - test_ratio

train_df = df[mask]

test_df = df[~mask]

return train_df, test_df

# Main execution

def main():

parser = argparse.ArgumentParser(description="Process dataset and parameters.")

parser.add_argument("dataset_path", help="Path to the CSV dataset file")

parser.add_argument("--test_ratio", type=float, default=0.2, help="Test set ratio (default: 0.2)")

parser.add_argument("--missing_threshold", type=int, default=1, help="Missing value threshold for dropping records (default: 1)")

args = parser.parse_args()

# Read CSV into DataFrame

data_df = read_csv_into_dataframe(args.dataset_path)

# Validate and convert string values to numeric

data_df, conversion_dict = validate_and_convert_numeric(data_df)

# Preprocess missing values

data_df = preprocess_missing_values(data_df, args.missing_threshold)

# Split dataset into training and test sets

train_set, test_set = split_dataset(data_df, args.test_ratio)

# Print converted values for reference

print("Converted Values:")

for column, converted_values in conversion_dict.items():

print(f"{column}: {converted_values}")

# Print resulting DataFrames

print("\nTraining Set:")

print(train_set)

print("\nTest Set:")

print(test_set)

# Execute the main function

if __name__ == "__main__":

main()

With this version of the script, you can run it from the command line like this:

python script_name.py dataset.csv --test_ratio 0.3 --missing_threshold 2

Replace script_name.py with the actual name of your script and provide the necessary dataset file path along with any desired test ratio and missing threshold values.

Converting your code to an App

let’s imagine we’re aiming to prepare this code for a more professional context (just playing along here). We can also ask ChatGPT to help us integrate these functions into a Streamlit application.

Streamlit: is a Python library used to turn data scripts into interactive web applications, ideal for data science and machine learning projects. It enables easy creation of dashboards and visualizations without complex web development.

ChatGPT prompt:

Transform the previous program into a Streamlit app, giving users the ability to input the same arguments through the command line as mentioned earlier.

Here’s the previous code transformed into a Streamlit app, allowing users to input the same arguments via the command line:

import streamlit as st

import pandas as pd

import numpy as np

# Function to validate and convert string values to numeric

def validate_and_convert_numeric(df):

conversion_dict = {}

for column in df.columns:

if df[column].dtype == 'object':

try:

df[column] = pd.to_numeric(df[column], errors='raise')

except ValueError:

conversion_dict[column] = df[column].tolist()

df[column] = np.nan

return df, conversion_dict

# Function to preprocess missing values

def preprocess_missing_values(df, missing_threshold):

df = df.fillna(np.nan)

df.dropna(thresh=df.shape[1] - missing_threshold, inplace=True)

return df

# Function to split dataset into training and test sets

def split_dataset(df, test_ratio):

mask = np.random.rand(len(df)) < 1 - test_ratio

train_df = df[mask]

test_df = df[~mask]

return train_df, test_df

# Streamlit app

def main():

st.title("Dataset Processing with Streamlit")

dataset_path = st.text_input("Path to CSV dataset file")

test_ratio = st.slider("Test set ratio", 0.0, 1.0, 0.2)

missing_threshold = st.slider("Missing value threshold", 0, 10, 1)

if st.button("Process"):

data_df = pd.read_csv(dataset_path)

data_df, conversion_dict = validate_and_convert_numeric(data_df)

data_df = preprocess_missing_values(data_df, missing_threshold)

train_set, test_set = split_dataset(data_df, test_ratio)

st.write("Converted Values:")

st.write(conversion_dict)

st.write("Training Set:")

st.write(train_set)

st.write("Test Set:")

st.write(test_set)

if __name__ == "__main__":

main()

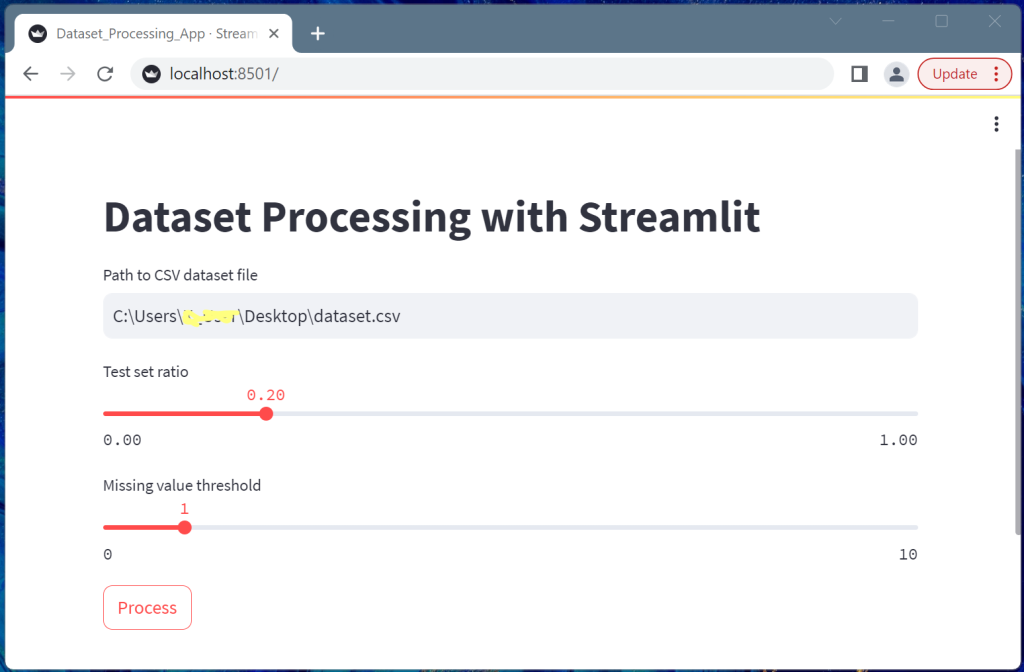

This code has been adapted into a Streamlit app, providing users with input fields for dataset path, test ratio, and missing threshold. After pressing the “Process” button, the app will display the processed data, converted values, training set, and test set.

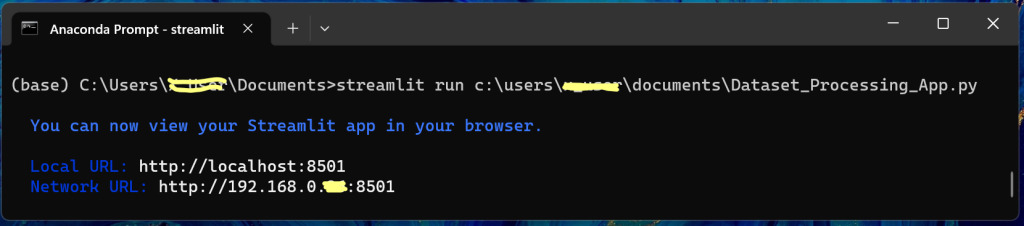

Run the code from Command Prompt (any OS):

Application output (any web browser):

Explain the code in detail

We can also ask ChatGPT to explain the code in detail (step by step) with explanation.

ChatGPT prompt:

Explain the code in detail to understand properly.

Certainly, let’s go through the code step by step to explain how it works:

import streamlit as st

import pandas as pd

import numpy as np

- Here, we import the necessary libraries: streamlit for building the web app, and pandas and numpy for data processing.

def validate_and_convert_numeric(df):

# Function to validate and convert string values to numeric

conversion_dict = {}

for column in df.columns:

if df[column].dtype == 'object':

try:

df[column] = pd.to_numeric(df[column], errors='raise')

except ValueError:

conversion_dict[column] = df[column].tolist()

df[column] = np.nan

return df, conversion_dict

- This function, validate_and_convert_numeric, is used to convert string values to numeric in a DataFrame.

- It iterates through each column in the DataFrame and checks if the column’s data type is “object” (usually indicating string values).

- If so, it attempts to convert the column to numeric using pd.to_numeric. If conversion fails (raises a ValueError), the original values are recorded in conversion_dict, and the values in the DataFrame are replaced with NaN (Not a Number).

def preprocess_missing_values(df, missing_threshold):

# Function to preprocess missing values

df = df.fillna(np.nan)

df.dropna(thresh=df.shape[1] - missing_threshold, inplace=True)

return df

- The preprocess_missing_values function fills missing values in the DataFrame with NaN using fillna.

- It then drops rows with missing values exceeding the specified missing_threshold using dropna with the thresh parameter.

def split_dataset(df, test_ratio):

# Function to split dataset into training and test sets

mask = np.random.rand(len(df)) < 1 - test_ratio

train_df = df[mask]

test_df = df[~mask]

return train_df, test_df

- This function, split_dataset, randomly divides the DataFrame into training and test sets based on the given test_ratio.

- It generates a random mask using np.random.rand and compares it with the complement of test_ratio to determine which rows go into the training set.

The remaining rows form the test set.

def main():

# Streamlit app

st.title("Dataset Processing with Streamlit")

dataset_path = st.text_input("Path to CSV dataset file")

test_ratio = st.slider("Test set ratio", 0.0, 1.0, 0.2)

missing_threshold = st.slider("Missing value threshold", 0, 10, 1)

if st.button("Process"):

data_df = pd.read_csv(dataset_path)

data_df, conversion_dict = validate_and_convert_numeric(data_df)

data_df = preprocess_missing_values(data_df, missing_threshold)

train_set, test_set = split_dataset(data_df, test_ratio)

st.write("Converted Values:")

st.write(conversion_dict)

st.write("Training Set:")

st.write(train_set)

st.write("Test Set:")

st.write(test_set)

- The main function is where the Streamlit app is defined.

- It uses various Streamlit widgets to create an interactive user interface:

- st.title sets the app’s title.

- st.text_input creates an input field for the dataset path.

- st.slider generates sliders for the test ratio and missing value threshold.

- st.button adds a button labeled “Process” to trigger data processing.

- When the “Process” button is clicked, the app reads the dataset using pd.read_csv, applies the defined functions, and displays the results using st.write.

if __name__ == "__main__":

main()

- Finally, this line ensures that the main function is executed when the script is run directly.

Overall, this code creates a user-friendly web app with Streamlit where users can input dataset details and processing parameters, and then view the processed data, conversion details, training set, and test set by clicking the “Process” button.

Final notes:

In conclusion, make the most of ChatGPT as your assistant for Python/(several others) programming tasks. Throughout this blog, you’ve gained insights into the following key areas:

Preparing a Simple Data Processing Python Script: You’ve discovered the fundamentals of creating a basic Python script to process data using Pandas and NumPy.

Refactoring Your Python Code: You’ve explored the significance of organizing your code using functions to enhance readability, reusability, and efficiency.

Extending Your Code: You’ve learned how to build upon your initial script by incorporating additional functionalities to cater to specific requirements.

Converting Your Code to an App: You’ve delved into the process of transforming your script into an interactive web application using the Streamlit library.

Explaining the Code in Detail: You’ve comprehensively understood each code segment through a step-by-step breakdown, facilitating a clear comprehension of the script’s functionality.

Empower your Python programming endeavors by harnessing ChatGPT’s guidance and insights ranging from simple scripting to code optimization and application development.

2024/04/23

2024/04/23 2024/03/26

2024/03/26 2024/02/27

2024/02/27 2024/02/23

2024/02/23 2024/02/09

2024/02/09 2024/02/02

2024/02/02 2024/01/23

2024/01/23 2024/01/12

2024/01/12